A Primitive Parametric

Contemporary research on the human genome has directly influenced new biological metaphors for architectural design. The architectural historian Martin Bressani has summarized this tendency as a new form of “Architectural Biology,” which the critics Reinhold Martin and Manuel DeLanda have related to historical paradigms of architectural organicism. [1] The intent of this paper is to discuss the biological metaphors that translate the generative principles of genomics into new strategies for architectural design.

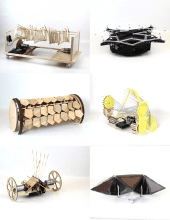

Computational Methods Robotics

Like the language of machines that inspired Le Corbusier a century ago, these projects creatively explore how the language of robotics could inspire a new generation of architects spatially and formally, encouraging future architects to imagine smart environments with the intelligence and behavior of animate buildings. Working in groups, students developed a robot based on a natural system, exemplifying various approaches to defining and implementing the distinction between natural and artificial systems as a driver for computational design. The project requirements included a Bluetooth-controlled robot that exhibits the two primary attributes of a robot: the ability to be reprogrammed and a complex range of motion. Thus each robot had to be able to move forward and backward and to turn. Additionally, it had to perform a physical transformation related to the study of a natural system. Students integrated the specific contextual parameters for material processing and performative parameters to develop computational processes for transformation. During the final exam debut performance, each project was introduced by an announcer who read the project description as the uniquely designed, fabricated, and programmed robot strutted its computational prowess, walking, rolling, or even dancing down the runway that spans half the length of the Storrs Salon. This performance showcased the range of motion and design attributes of each robot.

WHISPERS: An Interactive Sound Installation

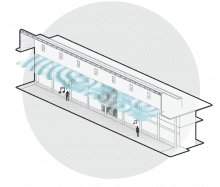

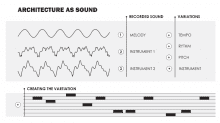

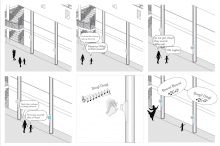

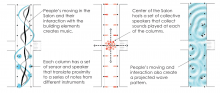

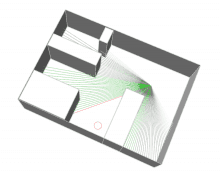

Whispers is about exploring how to transform a static building into a dynamic space, by integrating music and architecture. People’s presence in space and their movements and interactions with the space can lead to the creation of both individualized experiences with the sound as well as a collected representation of the sounds.

This offers unique ways to capture occupants’ presence as they make their way through a building. When walking alongside the colonnade in the Storrs Salon, occupants begin to hear “whispers” of low-volume sounds responding to their presence. Here, architectural elements are the instruments and the occupants are the musicians.

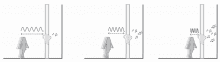

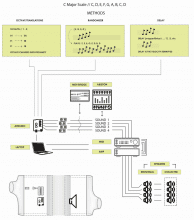

This system uses a sensor-speaker set at each column. The sensors measure the distance to the person interacting with the column, and code running on microcontrollers translates that distance into musical notes and variations. [1]

The challenge is how to create a “controlled chaos.” We used a randomizer, changes in measured delays, and translation from one octave to another, while keeping all notes in the key of C. With this method we found consistency while also allowing for chaos and the unexpected to occur.
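The mapping described above can be sketched in a few lines. This is only an illustration written in Python, not the installation's microcontroller code: the function names, the 200 cm sensor range, the use of MIDI note numbers, the reading of "key of C" as C major, and the delay values are all assumptions.

```python
import random

# Semitone offsets of the C major scale from C (assumption: "key of C"
# is read here as C major).
C_MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]


def distance_to_note(distance_cm, max_distance_cm=200.0, base_midi=60, rng=random):
    """Map a sensed distance to a MIDI note number that stays in C major."""
    # Clamp the reading to the assumed sensor range.
    d = min(max(distance_cm, 0.0), max_distance_cm)
    # Closer occupants select higher scale degrees.
    closeness = 1.0 - d / max_distance_cm
    degree = min(int(closeness * len(C_MAJOR_STEPS)), len(C_MAJOR_STEPS) - 1)
    # Randomizer: sometimes move the note one octave down or up.
    # Shifting by 12 semitones keeps the note in the same key.
    octave_shift = rng.choice([-12, 0, 0, 12])
    return base_midi + C_MAJOR_STEPS[degree] + octave_shift


def next_delay_ms(base_delay_ms=250, jitter_ms=100, rng=random):
    """Vary the pause between notes inside a bounded range."""
    return base_delay_ms + rng.randint(-jitter_ms, jitter_ms)
```

Because the random choices only affect octave and timing, every note remains in the chosen key, which is one way to read the balance between consistency and chaos.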

Noushin Radnia Presented at the 16th Annual Hawaii International Conference on Arts and Humanities

DesComp Instagram!

Check out our new(ish) instagram page for updates on our program and students.

https://www.instagram.com/descomp_uncc/

Dimitris Papanikolaou joins the Design Computation Faculty

We are pleased to announce that Dimitris Papanikolaou is joining the faculty at UNC Charlotte in a tenure-track joint appointment in the School of Architecture (SoA) and Department of Software & Information Systems (SIS). Dimitris recently completed a position as a Postdoctoral Associate in the Graduate School of Design at Harvard. He holds a Doctor of Design (DDes) from Harvard, an MS in Media Arts and Sciences (Media Lab) from MIT, an MS in Design and Computation from the School of Architecture at MIT, and a Diploma from the National Technical University of Athens. Dimitris will bring with him an Urban Synergetics Lab that will operate at the intersection of Architecture, Computation, and Information Systems, with a mission to explore new cooperative models of architectural/urban intelligence in support of the DesComp program and, more broadly, architecture and computation. While Dimitris will initially focus on urban mobility systems, he expects to expand his work into architectural areas such as the Internet of Things and intelligent structures, working on projects related to computational design and digital fabrication.

Animal: An Agent-Based Model of Circulation logic for Dynamo

By Christian Sjoberg, Chris Beorkrem, Jefferson Ellinger, and Alireza Karduni. Presented at ACSA 2017

Link to full paper

Computational tools used by designers exist within an ecosystem of software providing packages of functionality to the user. The ultimate goal of this project is to create an agent-based modeling tool that gives designers a means of integrating human movement into the computational design process. To maximize the usability of the tool, this project has been developed as a package for Dynamo, the scripting environment for the BIM software Autodesk Revit. The tool was written primarily in the Python programming language using the Dynamo API, with some additional functionality provided by standard Dynamo components. This allows designers to use the tool within the scripts or graphs they may already be producing during the design process.

Each type of object in a designer’s model may have different effects on the behavior of agents navigating the space. At their most basic level, walls serve to impede the movement of an agent, furniture impedes movement but not visual perception, and circulation elements such as stairs and elevators may serve as attractors pulling agents through a lobby towards levels above. Additionally, certain programmatic elements may attract or repel the agents based on their desires. This package for Dynamo will provide designers with a method for defining and integrating the effects that these elements may have on occupants into the computational processes they use for design.

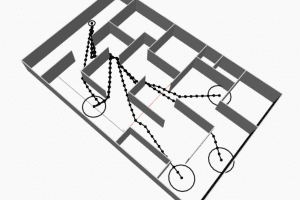

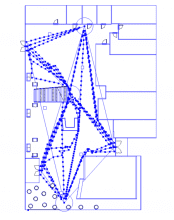

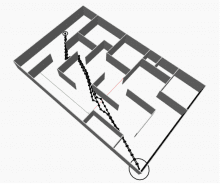

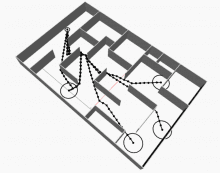

This agent-based tool requires four primary inputs. First, the user specifies the number of occupants that they wish to simulate. Second, agents must receive a list of possible starting states, provided to the tool as a list of point locations. Third, the system requires a list of point locations to serve as the agents’ possible destinations. Starting and ending locations are then randomly matched to provide a location-target pair for each agent in the simulation. Finally, the user provides the system with an array of solid geometries defining the environment for the agents to occupy. These geometries are selected by the user from their Revit model. The user must also define the agent’s appropriate response to the objects provided. Some additional settings for agent speed and other variables can be manipulated by the user as well. These inputs create the basic conditions for the simulation.
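The random matching of starting and ending locations could look like the following minimal sketch. It is plain Python rather than the Dynamo package itself; the function name `make_location_target_pairs`, the use of coordinate tuples for points, and the optional seed are assumptions made for illustration.

```python
import random


def make_location_target_pairs(agent_count, starts, targets, seed=None):
    """Give each agent a randomly chosen (start, target) pair.

    starts and targets are lists of point locations, represented here
    as simple coordinate tuples (an assumption for this sketch).
    """
    rng = random.Random(seed)
    return [(rng.choice(starts), rng.choice(targets)) for _ in range(agent_count)]
```

Passing a seed makes a run repeatable, which can help when comparing design options against the same set of simulated occupants.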

In order to simulate the movement of occupants in a space, the system relies on a set of algorithms which provide the agents with a method for handling the presence of obstacles in their path. An agent’s ability to select an appropriate direction for movement relies primarily on information present within its field of vision. This field is created as an array of vectors radiating from the agent’s current location. To approximate the human field of vision, a set number of these vectors are arrayed at even intervals from the direction of the agent’s last step up to ninety degrees in either direction. Each vector in the agent’s field of vision is cast out into the environment and returns a list of intersections with objects. The distance from the agent to the nearest intersection in the list provides the value for that vector of the agent’s vision. This calculation is repeated to form an array of distances which the agent can “see” at each interval of its vision. The result is the equivalent of two-dimensional vision: a line of pixels whose brightness represents the visible distance in each direction. Using this basic vision, confined to a two-dimensional plane, the agent gathers basic distance information about its environment.
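A simplified version of this vision pass is sketched below. It assumes a flat plan in which obstacles are reduced to 2D wall segments, whereas the tool itself works with solid geometry from Revit; the function names, the `max_range` cap for rays that hit nothing, and the default ray count are hypothetical.

```python
import math


def ray_segment_distance(origin, direction, seg_a, seg_b):
    """Distance along a unit-length ray to a wall segment, or None on a miss."""
    ox, oy = origin
    dx, dy = direction
    ax, ay = seg_a
    ex, ey = seg_b[0] - ax, seg_b[1] - ay
    denom = dx * ey - dy * ex
    if abs(denom) < 1e-12:
        return None  # ray and segment are parallel
    t = ((ax - ox) * ey - (ay - oy) * ex) / denom  # distance along the ray
    u = ((ax - ox) * dy - (ay - oy) * dx) / denom  # position along the segment
    if t >= 0.0 and 0.0 <= u <= 1.0:
        return t
    return None


def field_of_vision(position, heading, walls, ray_count=9, max_range=50.0):
    """Return the visible distance for each ray, spread over +/- 90 degrees."""
    base = math.atan2(heading[1], heading[0])
    distances = []
    for i in range(ray_count):
        # Even angular intervals from -90 to +90 degrees about the heading.
        offset = -math.pi / 2 + math.pi * i / (ray_count - 1)
        direction = (math.cos(base + offset), math.sin(base + offset))
        hits = [ray_segment_distance(position, direction, a, b) for a, b in walls]
        hits = [h for h in hits if h is not None]
        # Keep the nearest intersection; rays that hit nothing see max_range.
        distances.append(min(hits + [max_range]))
    return distances
```

The returned list plays the role of the "line of pixels" described above, with one distance value per viewing direction.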

Once this information is collected for the agent’s current location, the agent must evaluate its possible movement vectors against reaching its target location. This is accomplished by scoring each vector of the view array. The view distance of the vector is multiplied by the inverse of the angle between the view vector and a vector to the agent’s target location. Distance values are normalized to a value between zero and one, and the angles between the view vector and target vector are likewise scaled to a value between zero and one. Normalizing the values ensures that scores are relative to the options actually available. These values are then multiplied by a weight coefficient, which allows for adjustment of which factor the system will prioritize when selecting the appropriate movement vector. Weights in the system include user-defined variables for agent behavior and response to specific object types. This formula effectively ensures that each view vector is scored by its chance of moving the agent toward the target without coming in contact with an obstacle. (Figure 2)
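The scoring step can be illustrated with a short sketch. The exact formula is not given in the text, so several choices here are assumptions: the "inverse of the angle" is taken as one minus the angle scaled by 180 degrees, angles are supplied in radians, and the optional per-vector `object_weights` stand in for the object-type responses. The function names are hypothetical.

```python
import math


def score_view_vectors(distances, angles, distance_weight=1.0,
                       direction_weight=1.0, object_weights=None):
    """Score each view vector from its visible distance and its angle to the target.

    distances: visible distance per view vector.
    angles: angle in radians between each view vector and the target vector.
    object_weights: optional per-vector multiplier (assumed stand-in for
    responses to specific object types).
    """
    if object_weights is None:
        object_weights = [1.0] * len(distances)
    max_d = max(distances) or 1.0  # avoid dividing by zero
    scores = []
    for d, a, w in zip(distances, angles, object_weights):
        d_norm = d / max_d                                # 0..1, longer view is better
        a_norm = 1.0 - min(abs(a), math.pi) / math.pi     # 0..1, smaller angle is better
        scores.append((distance_weight * d_norm) * (direction_weight * a_norm) * w)
    return scores


def best_vector_index(scores):
    """Index of the highest-scoring view vector."""
    return max(range(len(scores)), key=scores.__getitem__)
```

Note that in a pure product, a single global weight rescales every score equally and does not change the ranking; in this sketch only the per-vector weights shift which direction wins. How the weights enter the tool's own formula may differ.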

The algorithm defines the agent’s path by repeating this process of vector casting and scoring from each position which the agent moves to. The speed at which the agent travels during the simulation is defined by the user. This is then divided by the number of iterations that the system goes through per second to determine the appropriate distance to move the agent at each iteration.

In order to prevent the system from calculating only one possible solution for each starting point to target pair, the weight coefficients of both distance and direction factors are multiplied by a random value within a specified range. Each agent receives a specific set of weight coefficients which they will use to score at each iteration along their path. This helps to simulate the variations in behavior between individuals when navigating space.
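The step length and the per-agent variation described in the two paragraphs above reduce to a small amount of arithmetic. In this sketch the function names and the 0.8 to 1.2 randomization range are assumptions; the text only says the range is specified.

```python
import random


def step_distance(speed_per_second, iterations_per_second):
    """Distance an agent moves per iteration."""
    return speed_per_second / iterations_per_second


def randomized_weights(distance_weight, direction_weight, low=0.8, high=1.2, seed=None):
    """Scale both weight coefficients by independent random factors.

    Called once per agent, so each agent keeps its own weights for its
    whole path.
    """
    rng = random.Random(seed)
    return (distance_weight * rng.uniform(low, high),
            direction_weight * rng.uniform(low, high))
```

For example, an agent walking at 1.4 units per second in a simulation that runs 10 iterations per second would advance 0.14 units per step.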

In addition to this basic movement algorithm, agents are also repelled from objects that are less than a user specified distance from them. This helps to prevent the agent from occupying only the shortest path between the starting location and the target. For example, an agent who is rounding a corner to reach a target may see the greatest distance and angle at the edge of the obstacle corner. This would then result in a consistently high score along that vector. In order to prevent all agents from turning at the sharp corner of the object, the agent will be repelled from the geometry by a value proportional to the distance between their location and the geometry. All wall geometry within the model incorporates this logic in order to avoid collision.
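One possible form of this repulsion is sketched below for 2D wall segments. The text states only that the push is proportional to the distance between agent and geometry; this sketch assumes the push grows with how far inside the threshold the agent is, and the function names and `strength` parameter are hypothetical.

```python
import math


def closest_point_on_segment(p, a, b):
    """Closest point to p on the segment from a to b."""
    ax, ay = a
    ex, ey = b[0] - ax, b[1] - ay
    length_sq = ex * ex + ey * ey
    if length_sq == 0.0:
        return a
    t = ((p[0] - ax) * ex + (p[1] - ay) * ey) / length_sq
    t = min(max(t, 0.0), 1.0)  # stay on the segment
    return (ax + t * ex, ay + t * ey)


def repulsion_vector(position, walls, threshold, strength=1.0):
    """Sum of pushes away from every wall closer than the threshold."""
    push_x, push_y = 0.0, 0.0
    for a, b in walls:
        cx, cy = closest_point_on_segment(position, a, b)
        dx, dy = position[0] - cx, position[1] - cy
        dist = math.hypot(dx, dy)
        if 0.0 < dist < threshold:
            # Assumed form: stronger push the deeper inside the threshold.
            magnitude = strength * (threshold - dist)
            push_x += magnitude * dx / dist
            push_y += magnitude * dy / dist
    return (push_x, push_y)
```

Adding this vector to the chosen movement step nudges agents away from corners rather than letting them all cut the same tight turn.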

Following this logic, the agent begins at a location provided by its location-target pair and steps iteratively toward its target while navigating the space of the model. These algorithms are written in Python within a Dynamo component. This allows for the recursive execution of the stepping function for each agent. This process repeats until the user-defined number of iterations has been reached.

The tool generates a visual output for the user by drawing a spline between all locations the agent stepped through on their path. This process is repeated for each agent in the simulation. The tool also outputs a two dimensional list of all point locations for each agent. This allows the user to easily incorporate this movement data into their script.

Internet of Plants: Queen’s Clever Garden

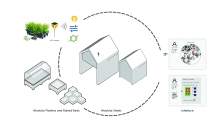

INTERNET OF PLANTS: Queen’s Clever Garden, by Lina Tehari, Spring 2017 StudioLab

Living in cities can be problematic for many people; some of these problems can be solved by embedding technology and connectivity into the very fabric of the city. By applying the concept of the “Clever City” and utilizing the Internet of Things at both large and small scales, this project addresses community activities and their impact on improving quality of life for those who engage in them.

This project is a design proposal for a series of clever gardens that work as incubators and living labs for improving the quality of life of the low-income and homeless populations of the City of Charlotte’s North End Smart District, by creating a new life routine, teaching skills, and providing access to healthy food in places that are recognized as food deserts. The gardens serve as a focal point for other communities to engage with and join. Most importantly, these gardens expose the problem of homelessness and the immediate needs of those experiencing it, while also providing easy access to healthy food in low-income communities.

By integrating affordable and widely available technology into gardens, the production efficiency of these community gardens can be ensured and potentially increased. Each smart garden has three components: 1) physical components, 2) an interface, and 3) data collection from both the plant sensors and the interface.

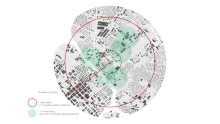

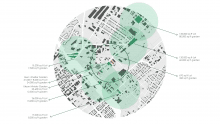

The specification of locations for the garden system is driven by layers of city data. The City of Charlotte has one of the largest open networks of information about city services and demographics in the U.S. The locations of vacant lots within walking distance of Charlotte homeless shelters and services were used to initially identify ideal places to locate the gardens.
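The site filter described above can be sketched as a simple distance test. This is an illustration only: it assumes lot and shelter locations are already in a projected coordinate system measured in meters, uses straight-line rather than street-network distance, and picks 800 m as a placeholder walking distance. The function name is hypothetical.

```python
import math


def lots_within_walking_distance(lots, shelters, max_walk_m=800.0):
    """Return the names of lots whose nearest shelter is within max_walk_m.

    lots: mapping of lot name to (x, y) in meters.
    shelters: list of (x, y) shelter or service locations in meters.
    """
    selected = []
    for name, (x, y) in lots.items():
        nearest = min(math.hypot(x - sx, y - sy) for sx, sy in shelters)
        if nearest <= max_walk_m:
            selected.append(name)
    return selected
```

A real workflow would more likely run this kind of query in a GIS against the city's open data layers; the sketch only shows the underlying selection logic.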

The physical components of the gardens include modular planters, raised beds, and modular sheds, as well as individual sensors that measure soil moisture and temperature, nitrogen content, and the amount of light.

The sheds are the hub where gardeners interact with the interface. They accommodate tools and touch screens for the interface, and they work as classrooms for learning skills and connecting to professionals and gardening databases. Each shed is equipped with a weather station and Wi-Fi. The shed is built in the simplest modular form so that it can easily be repeated and moved around. Each garden could have multiple sheds accommodating different equipment, such as a server room or refrigeration and packing equipment for the harvest.

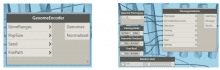

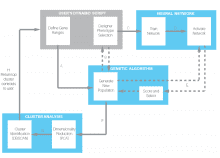

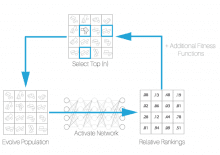

Machine Learning Models For Directed Curation Of Design Solution Space

DesComp Thesis completed by Christian Sjoberg (2017). Presented at ACADIA 2017

As the influence of computation on design continues to grow, designers have the ability to optimize and account for high numbers of variables. This results in a high-dimensional solution space and an exponentially increasing number of possible variations. Optimization strategies enable designers to navigate toward solutions which are quantitatively superior in pre-defined tests, but limit the designer’s ability to search the solution space for qualitative aspects. Fully accepting a quantitatively optimized solution poses an issue for designers. Calculating a top-performing design based on limited or standardized criteria reduces the role of the designer. This has the potential to situate design as a secondary layer on calculated models. This workflow limits the ability of designers to holistically consider the aspects of design problems which are abstract or qualitative. To counteract this side effect, designers may include quantitative descriptions of qualitative criteria in their computational models. In doing so, they constrain the solution space based on their preconceived expectations of the possible outcomes. In either situation, the designer is limited in their exploration of the full solution space, which reduces the opportunity to discover emergent properties of designs resulting from unexpected combinations of parameters.

If we are to avoid this, we must re-evaluate the possible methods for navigating vast solution spaces and create models which can evaluate solutions based on both quantitative evaluations and qualitative properties. In the context of this project, the term qualitative is used to describe features of a computationally generated design which are visibly recognizable as favorable to the designer, but are not measured by the designer’s script as an evaluation of the design’s performance. These features can be understood to be the emergent result of the combined state of many parameters of the design. Due to the abstract and subjective nature of qualitative criteria, designers can benefit from a process of selection of solutions based on their own expertise and intuition rather than the simplification of a problem and constraint of possible solutions.

We may imagine, for example, an artist in the process of making a sculpture. The artist does not explicitly or mathematically define the proper curvature of a surface of the sculpture before they begin to form it. They instead manipulate their design iteratively, and visually evaluate the current state of the form until they recognize that it has taken on the quality they find favorable. As humans, we are far better at visually recognizing a geometry we find to be favorable than we are at explicitly defining it mathematically. We may even find that our original assumptions, or what we thought to be ideal, change in the process of creating a design.

The methods that computational designers currently employ to search for design options in a defined parameter space do not represent the inherent artistic processes of the designer, because they usually require the explicit definition of what is acceptable as a solution before the search process ever begins. If we can create software systems that more closely resemble the artist’s evaluation of design qualities we can begin to remove some of the limitations that result from the need to explicitly define criteria at the beginning of our search. To perform meaningful evaluation of qualities subjectively favorable to an individual designer, a system must first create a model of the designer’s evaluation process.

This project seeks to develop and test a method for searching vast solution spaces based on inexplicit evaluation criteria demonstrated by designers using the system.

Machine learning methods allow for the formation of evaluative criteria based on patterns learned from the user’s selection of design options. As designers are iteratively presented with design choices, they choose the solutions that meet their personal aesthetic, abstract, or qualitative criteria for design. The encoded design parameters for the possible selections, as well as the actual user selections, are recorded as a training set for an artificial neural network. A network trained on this selection data acts as a model of the user’s evaluation of design options. Using this model as the fitness function for an evolutionary solver provides a mechanism for searching solution spaces containing more design possibilities than could possibly be evaluated individually by a human. Learning from the designer’s evaluation of a sample of the possible solutions, this system demonstrates the ability to identify additional high-performing regions of the solution space based on the user’s inexplicit qualitative criteria. Allowing designers to visually identify the presence of favorable qualitative features leverages the human’s inherent strengths, while reducing the limitations resulting from the need for explicit limitation of the solution space.
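The overall loop, a model trained on the designer's selections and then used as the fitness function of an evolutionary search, can be sketched in plain Python. To stay short and dependency-free, this sketch swaps the artificial neural network for a single logistic unit, which is a much simpler model than the one the project describes. All function names, the parameter encoding in the range 0 to 1, and the solver settings are assumptions.

```python
import math
import random


def sigmoid(z):
    # Clamp extreme inputs to avoid overflow in exp().
    if z < -60.0:
        return 0.0
    if z > 60.0:
        return 1.0
    return 1.0 / (1.0 + math.exp(-z))


def train_preference_model(samples, labels, epochs=500, lr=0.5):
    """Fit one logistic unit to (parameter vector, selected or not) pairs."""
    weights, bias = [0.0] * len(samples[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            pred = sigmoid(sum(w * v for w, v in zip(weights, x)) + bias)
            err = pred - y
            weights = [w - lr * err * v for w, v in zip(weights, x)]
            bias -= lr * err
    return weights, bias


def predicted_preference(model, x):
    """Model's estimate (0..1) that the designer would select design x."""
    weights, bias = model
    return sigmoid(sum(w * v for w, v in zip(weights, x)) + bias)


def evolve(model, n_params, population=30, generations=40, mutation=0.1, seed=0):
    """Search the parameter space using the learned model as fitness."""
    rng = random.Random(seed)
    pop = [[rng.random() for _ in range(n_params)] for _ in range(population)]
    for _ in range(generations):
        pop.sort(key=lambda x: predicted_preference(model, x), reverse=True)
        parents = pop[: population // 2]
        # Each parent produces one mutated child, clamped to [0, 1].
        children = [[min(1.0, max(0.0, v + rng.gauss(0.0, mutation))) for v in p]
                    for p in parents]
        pop = parents + children
    return max(pop, key=lambda x: predicted_preference(model, x))
```

The point of the arrangement is that the solver never needs an explicit definition of what the designer likes; it only needs the model's prediction for each candidate.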

A MACHINE LEARNING APPROACH

The discipline of architecture has explored the computational generation of designs through rule-based logics for many years. One of the principal applications of this research has been the task of automated space planning. Charles Eastman’s General Space Planner (1973) is an example of one such system for creating space planning designs using decision tree logic for evaluation. The application of multiple pre-defined constraints to the problem space is used as a method for searching for design solutions (Eastman 1973). These and other similar methods rely heavily on the user and system’s anticipation of all possible design conditions, and the presence of a pre-programmed response. This top-down logic ultimately falls short of representing a human’s process of design, which is one of nuanced reasoning, accumulated experience, and personal intuition. To enable a system to better represent the complex process of design, it must be developed to recognize subtle decisions as well as the greater patterns of designers. The application of a bottom-up approach to learning design constraints has the potential to remove the requirement of explicitly defined design constraints and better represent the designer’s own process. It must be able to form a model of design responses that are not directly related to one specific condition, but to the presence of subtle patterns or combinations of multiple conditions.

The explicit curation of the solution space based on rules which operate at the level of the design parameter dramatically reduces the possibility of discovery of new options. Design is often a process of experimentation in which the designer tries numerous alternative approaches while both formulating and solving a problem. If the tool used by the designer requires them to explicitly define each of the criteria at the onset of the search for a solution, this process of exploration, discovery, and redirection is lost. To create tools which more naturally resemble the designer’s process, we can begin to develop a bottom-up approach to the creation of constraints for the solution space. The collection of a designer’s choices in their own process of curating possibilities to a design problem contains potentially identifiable patterns if we can consistently describe the steps of their process mathematically. Based on this limitation, a system capable of mathematically describing each of the design iterations explored by the designer can potentially form a model of the underlying relationships or patterns present in the set.

Creating an environment for the recording of the designer’s process poses a difficult problem. We must make trade-offs between the freedom provided to the designer and the effectiveness of the system in measuring the outputs of their process. Designers working with analog methods such as sketching and physical modeling produce artifacts which are difficult to evaluate comparatively, due to inconsistency in the relative meaning of any one determined variable between one artifact and the next. If, for example, a series of drawings representing the designer’s evolving understanding of a design is compared, there is no guarantee that a specific pixel value is comparable with the same pixel in another image drawn at another scale, from another angle, or in another medium. For the purposes of this project, it is important to establish a consistent and comparable definition of the objects considered by the system.

The computational processes of architectural designers today are commonly developed within visual scripting platforms. These software platforms allow users with little to no programming experience to create models of the logic they wish to use to produce a design. This practice of scripting design demonstrates a voluntary, explicit definition of the possible parameter space. This project utilizes the user defined parameter space as a means of capturing consistent and comparable descriptions of the design options being considered. In this way, any potential design option within the user defined parameter space can be fully represented by its unique set of parameter values. This allows for simple comparison of one design’s parameter sequence to another. The comparison of a series of designs selected by the designer for the presence of favorable qualitative traits provides the potential for pattern recognition between parameter values and user selection.
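Representing a design option by its parameter values can be illustrated as follows. The parameter names, the scaling of each value to the range 0 to 1, and the use of Euclidean distance for comparison are assumptions for this sketch, not details taken from the project.

```python
def encode_design(params, ranges):
    """Scale each named parameter to 0..1, in a fixed (sorted) order.

    params: mapping of parameter name to its current value.
    ranges: mapping of parameter name to its (low, high) slider limits.
    """
    vector = []
    for name in sorted(ranges):
        low, high = ranges[name]
        vector.append((params[name] - low) / (high - low))
    return vector


def parameter_distance(a, b):
    """Euclidean distance between two encoded designs."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5
```

Because every option is encoded in the same order and scale, any two designs from the same script can be compared directly, which is what analog artifacts such as sketches do not allow.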

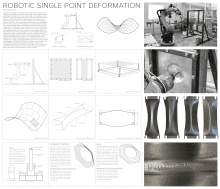

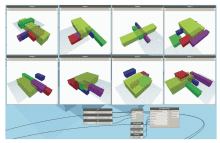

Pinned Incremental Metal Forming

DesComp Thesis completed by Paul Stockhoff (2017)

Slip Mounted Single Point Deformation expands upon the ideas of single point incremental metal forming, using a 6-axis robotic arm, by exploring how sheet metal can be deformed with minimal support bracing. The goal of this technique and research is to develop controlled methods for fabricating precise, doubly-curved, structural panels. The slip mounted technique requires mounting a piece of material in the vertical plane while bracing only two edges of the sheet. Unlike in traditional incremental metal forming, the material in this method is allowed to stretch, flex, and twist during forming. Single point incremental forming is the process in which a hardened metal stylus is attached to either a robotic arm or a CNC machine and then programmed to trace the contours of a shape gradually into a piece of metal, allowing for far more complex shapes than traditional forming methods. With each pass, the tool pushes between 0.3 mm and 1 mm, causing the sheet to deform into the desired geometry. During the development period of the single point incremental forming process, we identified three control variables: tool design, tool path generation, and the deformation limits of 20-gauge cold rolled steel sheets for doubly-curved surfaces. This initial research, along with explorations by others, became the underpinning for the work examined in this paper, where single point incremental metal forming is used to create doubly-curved panels which can create a self-supporting structural surface.
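The incremental nature of the process can be made concrete with a small sketch that lists the depth reached after each pass. It covers only the step-down schedule, not the actual tool path generation or robot programming; the function name and the 0.5 mm default are assumptions chosen from within the 0.3 mm to 1 mm range mentioned above.

```python
import math


def pass_depths(total_depth_mm, step_mm=0.5):
    """Cumulative forming depth after each pass, ending at total_depth_mm."""
    # Small tolerance so exact multiples do not gain an extra pass
    # through floating-point error.
    count = math.ceil(total_depth_mm / step_mm - 1e-9)
    return [min(total_depth_mm, round((i + 1) * step_mm, 6)) for i in range(count)]
```

A smaller step gives more passes and a gentler deformation per pass, at the cost of a longer forming time.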